Paper Note | SymLM: Predicting Function Names in Stripped Binaries via Context-Sensitive Execution-Aware Code Embeddings

Publication: CCS 2022

Abstract

Predicting function names in stripped binaries is an extremely useful but challenging task, as it requires summarizing the execution behavior and semantics of the function in human languages. Recently, there has been significant progress in this direction with machine learning. However, existing approaches fail to model the exhaustive function behavior and thus suffer from the poor generalizability to unseen binaries. To advance the state of the art, we present a function Symbol name prediction and binary Language Modeling (SymLM) framework, with a novel neural architecture that learns the comprehensive function semantics by jointly modeling the execution behavior of the calling context and instructions via a novel fusing encoder. We have evaluated SymLM with 1,431,169 binary functions from 27 popular open source projects, compiled with 4 optimizations (O0-O3) for 4 different architectures (i.e., x64, x86, ARM, and MIPS) and 4 obfuscations. SymLM outperforms the state-of-the-art function name prediction tools by up to 15.4%, 59.6%, and 35.0% in precision, recall, and F1 score, with significantly better generalizability and obfuscation resistance. Ablation studies also show that our design choices (e.g., fusing components of the calling context and execution behavior) substantially boost the performance of function name prediction. Finally, our case studies further demonstrate the practical use cases of SymLM in analyzing firmware images.

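Problem Addressed and Novelty

This work focuses on recovering function names in stripped binaries. The authors identify two shortcomings in prior work. First, some approaches ignore a function's dynamic behavior, so they cope poorly with the binary-level changes introduced by different optimization levels and architectures. Second, some approaches do not consider the calling context, so the ML model cannot fully understand what a function actually does.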

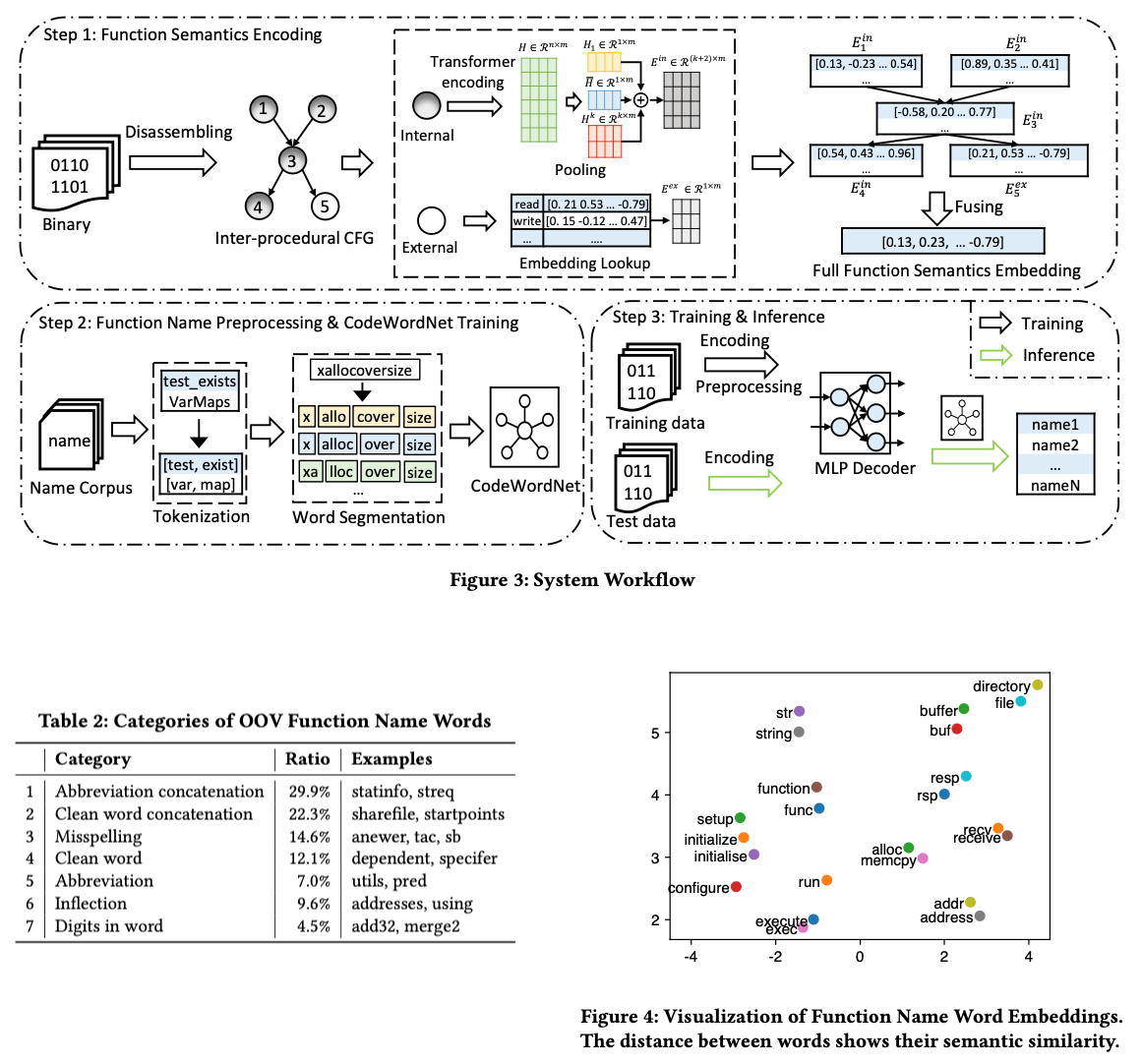

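To address this, the work adapts the microtrace-based pretraining proposed by TREX to produce embeddings that capture the dynamic properties of function instructions ("SymLM generates semantic embeddings of every token in function instructions I by the microtrace-based pre-trained model"); this is the "execution-aware" part of the title. It then uses the call relationships between functions to merge the embeddings of callers and callees into the current function; this is the "context-sensitive" part of the title.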
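The idea of folding calling context into a function's representation can be illustrated with a minimal sketch. Note that this is not SymLM's fusing encoder, which is a learned neural component; here the context is simply mean-pooled and concatenated, and the names `mean_vector`, `fuse_with_context`, and the dictionary layout are all hypothetical choices made for illustration.

```python
from typing import Dict, List, Sequence

Vector = List[float]


def mean_vector(vectors: Sequence[Vector], dim: int) -> Vector:
    """Average a list of vectors; return a zero vector if the list is empty."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def fuse_with_context(
    name: str,
    embeddings: Dict[str, Vector],
    callers: Dict[str, List[str]],
    callees: Dict[str, List[str]],
) -> Vector:
    """Concatenate a function's own embedding with pooled caller/callee embeddings.

    Assumption: mean pooling plus concatenation stands in for the learned
    fusion described in the paper.
    """
    own = embeddings[name]
    dim = len(own)
    caller_ctx = mean_vector([embeddings[c] for c in callers.get(name, [])], dim)
    callee_ctx = mean_vector([embeddings[c] for c in callees.get(name, [])], dim)
    # List concatenation: [own | caller context | callee context]
    return own + caller_ctx + callee_ctx


# Toy example: f is called by g and h, and calls nothing.
toy_embeddings = {"f": [1.0, 0.0], "g": [0.0, 2.0], "h": [2.0, 2.0]}
fused = fuse_with_context("f", toy_embeddings, {"f": ["g", "h"]}, {})
```

In this toy setup the fused vector is three times the original dimension; a function with no known callers or callees simply gets zero-filled context slots.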

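Another focus of the work is the OOV (out-of-vocabulary) problem caused by inconsistent function naming in the ground truth. In addition to several preprocessing steps, the authors train CodeWordNet to embed the words that appear in function names and capture their semantics.

Claimed Contributions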
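One common preprocessing step for reducing OOV words is splitting a function name into its component words. The sketch below is only an assumption about what such a step might look like: this note does not spell out the paper's preprocessing pipeline, and `split_function_name` is a hypothetical helper, not part of SymLM.

```python
import re
from typing import List

# Matches, in order: an acronym followed by a capitalized word (e.g. "HTTP" in
# "HTTPHeader"), a lowercase or Capitalized word, a trailing acronym, or digits.
_WORD_PATTERN = re.compile(r"[A-Z]+(?=[A-Z][a-z])|[A-Z]?[a-z]+|[A-Z]+|\d+")


def split_function_name(name: str) -> List[str]:
    """Split a snake_case or camelCase function name into lowercase words."""
    words: List[str] = []
    # First split on underscores and any other non-alphanumeric characters.
    for part in re.split(r"[_\W]+", name):
        words.extend(_WORD_PATTERN.findall(part))
    return [w.lower() for w in words]


snake = split_function_name("get_file_size")
camel = split_function_name("readConfigFile")
```

Splitting names this way maps many rare full names onto a much smaller vocabulary of shared words, which is the kind of vocabulary a word-embedding model such as CodeWordNet could then operate on.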

- We design a novel neural architecture, SymLM, to learn the comprehensive function semantics preserved in the execution behavior of the calling context and function instructions.

- We build the CodeWordNet module to measure semantic distance of function names with the domain-specific distributed representations and address OOV problems by preprocessing.

- We advance the state-of-the-art of function name prediction by outperforming existing works and show the generalizability, obfuscation resistance, component effectiveness, and the practical use case of SymLM.

Overall Approach